Kafka version: kafka_2.13-3.5.1

NOTE: Your local environment must have Java 8+ installed.

Apache Kafka can be started using ZooKeeper or KRaft. To get started with either configuration, follow one of the sections below, but not both.

1 Windows, single node

1.1 Kafka with KRaft

1.1.1 Generate a Cluster UUID

%kafka_home%\bin\windows\kafka-storage.bat random-uuid

KAFKA_CLUSTER_ID:7W5iXjO2SESIaSv770eIyA
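The ID printed here has the shape of a 128-bit UUID written as URL-safe base64 without padding, which gives 22 characters. The Python sketch below only reproduces that shape so the format is easier to recognize. It is not Kafka's generator, which may apply extra rules, and the function names are made up for illustration. For a real cluster, keep using kafka-storage.

```python
import base64
import uuid


def random_cluster_id() -> str:
    """Return 16 random bytes as unpadded URL-safe base64 (22 characters)."""
    raw = uuid.uuid4().bytes  # 16 bytes
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def decode_cluster_id(cluster_id: str) -> bytes:
    """Decode a 22-character ID back into its 16 raw bytes."""
    # 22 base64 characters need two '=' to reach a multiple of four.
    return base64.urlsafe_b64decode(cluster_id + "==")


if __name__ == "__main__":
    print(random_cluster_id())
    print(len(decode_cluster_id("7W5iXjO2SESIaSv770eIyA")))
```

Decoding an ID and checking that it yields 16 bytes is a quick way to catch a copy-paste error before passing it to the format step.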

1.1.2 Format Log Directories

server.properties uses the default configuration:

############################# Server Basics #############################

# The role of this server. Setting this puts us in KRaft mode

process.roles=broker,controller

# The node id associated with this instance's roles

node.id=1

# The connect string for the controller quorum

controller.quorum.voters=1@localhost:9093

############################# Socket Server Settings #############################

# The address the socket server listens on.

# Combined nodes (i.e. those with `process.roles=broker,controller`) must list the controller listener here at a minimum.

# If the broker listener is not defined, the default listener will use a host name that is equal to the value of java.net.InetAddress.getCanonicalHostName(),

# with PLAINTEXT listener name, and port 9092.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

listeners=PLAINTEXT://:9092,CONTROLLER://:9093

# Name of listener used for communication between brokers.

inter.broker.listener.name=PLAINTEXT

# Listener name, hostname and port the broker will advertise to clients.

# If not set, it uses the value for "listeners".

advertised.listeners=PLAINTEXT://localhost:9092

# A comma-separated list of the names of the listeners used by the controller.

# If no explicit mapping set in `listener.security.protocol.map`, default will be using PLAINTEXT protocol

# This is required if running in KRaft mode.

controller.listener.names=CONTROLLER

############################# Log Basics #############################

# A comma separated list of directories under which to store log files

log.dirs=/tmp/kraft-combined-logs

%kafka_home%\bin\windows\kafka-storage.bat format -t 7W5iXjO2SESIaSv770eIyA -c ../../config/kraft/server.properties

1.1.3 Start the Kafka Server
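Before starting the server, it may be worth sanity-checking the server.properties from 1.1.2. The Python sketch below uses hypothetical helpers (parse_properties, check_kraft_config) to check two things: that the listener named in controller.listener.names appears in listeners, as the file's own comments require for combined nodes, and that node.id is listed in controller.quorum.voters, which is my assumption about what a controller node needs. It is a rough check, not a reimplementation of Kafka's own validation.

```python
def parse_properties(text: str) -> dict:
    """Parse key=value lines, skipping blanks and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_kraft_config(props: dict) -> list:
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    roles = props.get("process.roles", "").split(",")
    if "controller" not in roles:
        return problems
    # controller.quorum.voters looks like "1@localhost:9093,2@host:9093"
    voters = dict(
        v.split("@", 1)
        for v in props.get("controller.quorum.voters", "").split(",")
        if v
    )
    if props.get("node.id") not in voters:
        problems.append("node.id is not listed in controller.quorum.voters")
    listener_names = {
        entry.split("://", 1)[0]
        for entry in props.get("listeners", "").split(",")
        if entry
    }
    for name in props.get("controller.listener.names", "").split(","):
        if name and name not in listener_names:
            problems.append("controller listener " + name + " missing from listeners")
    return problems


SAMPLE = """
process.roles=broker,controller
node.id=1
controller.quorum.voters=1@localhost:9093
listeners=PLAINTEXT://:9092,CONTROLLER://:9093
controller.listener.names=CONTROLLER
"""

if __name__ == "__main__":
    print(check_kraft_config(parse_properties(SAMPLE)))
```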

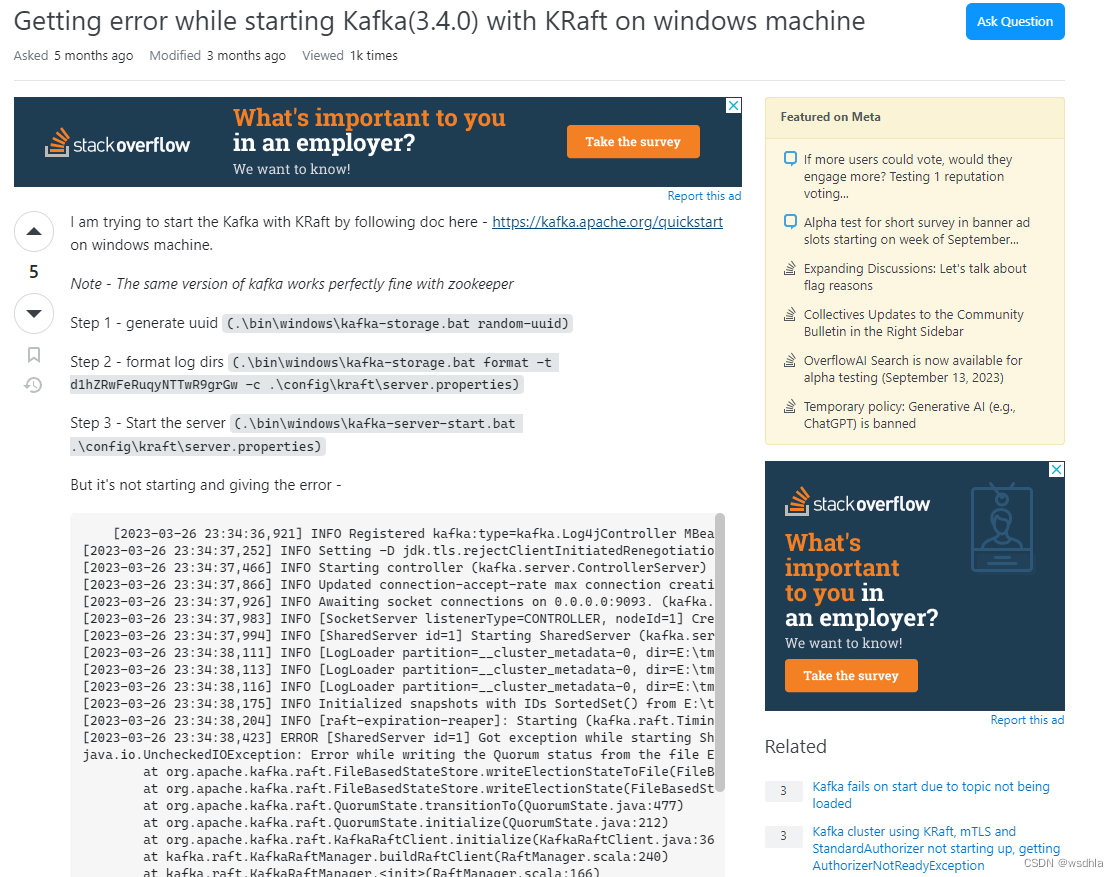

%kafka_home%\bin\windows\kafka-server-start.bat ../../config/kraft/server.properties

This failed with an error:

[2023-09-20 14:41:35,667] ERROR [SharedServer id=1] Got exception while starting SharedServer (kafka.server.SharedServer)

java.io.UncheckedIOException: Error while writing the Quorum status from the file C:\tmp\kraft-combined-logs\__cluster_metadata-0\quorum-state

at org.apache.kafka.raft.FileBasedStateStore.writeElectionStateToFile(FileBasedStateStore.java:155)

at org.apache.kafka.raft.FileBasedStateStore.writeElectionState(FileBasedStateStore.java:128)

at org.apache.kafka.raft.QuorumState.transitionTo(QuorumState.java:477)

at org.apache.kafka.raft.QuorumState.initialize(QuorumState.java:212)

at org.apache.kafka.raft.KafkaRaftClient.initialize(KafkaRaftClient.java:370)

at kafka.raft.KafkaRaftManager.buildRaftClient(RaftManager.scala:248)

at kafka.raft.KafkaRaftManager.<init>(RaftManager.scala:174)

at kafka.server.SharedServer.start(SharedServer.scala:247)

at kafka.server.SharedServer.startForController(SharedServer.scala:129)

at kafka.server.ControllerServer.startup(ControllerServer.scala:197)

at kafka.server.KafkaRaftServer.$anonfun$startup$1(KafkaRaftServer.scala:95)

at kafka.server.KafkaRaftServer.$anonfun$startup$1$adapted(KafkaRaftServer.scala:95)

at scala.Option.foreach(Option.scala:437)

at kafka.server.KafkaRaftServer.startup(KafkaRaftServer.scala:95)

at kafka.Kafka$.main(Kafka.scala:113)

at kafka.Kafka.main(Kafka.scala)

Caused by: java.nio.file.FileSystemException: C:\tmp\kraft-combined-logs\__cluster_metadata-0\quorum-state.tmp -> C:\tmp\kraft-combined-logs\__cluster_metadata-0\quorum-state: 另一个程序正在使用此文件,进程无法访问。

at sun.nio.fs.WindowsException.translateToIOException(WindowsException.java:86)

at sun.nio.fs.WindowsException.rethrowAsIOException(WindowsException.java:97)

at sun.nio.fs.WindowsFileCopy.move(WindowsFileCopy.java:387)

at sun.nio.fs.WindowsFileSystemProvider.move(WindowsFileSystemProvider.java:287)

at java.nio.file.Files.move(Files.java:1395)

at org.apache.kafka.common.utils.Utils.atomicMoveWithFallback(Utils.java:950)

at org.apache.kafka.common.utils.Utils.atomicMoveWithFallback(Utils.java:933)

at org.apache.kafka.raft.FileBasedStateStore.writeElectionStateToFile(FileBasedStateStore.java:152)

... 15 more

Suppressed: java.nio.file.FileSystemException: C:\tmp\kraft-combined-logs\__cluster_metadata-0\quorum-state.tmp -> C:\tmp\kraft-combined-logs\__cluster_metadata-0\quorum-state: 另一个程序正在使用此文件,进程无法访问。

at sun.nio.fs.WindowsException.translateToIOException(WindowsException.java:86)

at sun.nio.fs.WindowsException.rethrowAsIOException(WindowsException.java:97)

at sun.nio.fs.WindowsFileCopy.move(WindowsFileCopy.java:301)

at sun.nio.fs.WindowsFileSystemProvider.move(WindowsFileSystemProvider.java:287)

at java.nio.file.Files.move(Files.java:1395)

at org.apache.kafka.common.utils.Utils.atomicMoveWithFallback(Utils.java:947)

... 17 more

[2023-09-20 14:41:35,671] INFO [ControllerServer id=1] Waiting for controller quorum voters future (kafka.server.ControllerServer)

[2023-09-20 14:41:35,673] INFO [ControllerServer id=1] Finished waiting for controller quorum voters future (kafka.server.ControllerServer)

[2023-09-20 14:41:35,680] ERROR Encountered fatal fault: caught exception (org.apache.kafka.server.fault.ProcessTerminatingFaultHandler)

java.lang.NullPointerException

at kafka.server.ControllerServer.startup(ControllerServer.scala:210)

at kafka.server.KafkaRaftServer.$anonfun$startup$1(KafkaRaftServer.scala:95)

at kafka.server.KafkaRaftServer.$anonfun$startup$1$adapted(KafkaRaftServer.scala:95)

at scala.Option.foreach(Option.scala:437)

at kafka.server.KafkaRaftServer.startup(KafkaRaftServer.scala:95)

at kafka.Kafka$.main(Kafka.scala:113)

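at kafka.Kafka.main(Kafka.scala)

(The Chinese text in the trace is the Windows message "The process cannot access the file because it is being used by another process.")

No amount of searching helped: I could find other reports of the same problem, but no answers.

On Windows I had to leave it at that, unresolved... I was ready to give up.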
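For context, the trace shows the failure inside Utils.atomicMoveWithFallback, while quorum-state.tmp is being renamed over quorum-state. The Python sketch below shows that general write-then-rename pattern. It is not Kafka's code, the function name is made up, and it does not explain why the file was held open in my case.

```python
import os
import tempfile


def write_state_atomically(path: str, data: str) -> None:
    """Write data to '<path>.tmp', then rename it over path."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as f:
        f.write(data)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes reach the disk first
    # The rename is the step that fails in the trace above. On Windows it
    # can raise OSError when another handle still has the target file open.
    os.replace(tmp_path, path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "quorum-state")
        write_state_atomically(target, "epoch=1")
        write_state_atomically(target, "epoch=2")
        with open(target, encoding="utf-8") as f:
            print(f.read())
```

The point of the pattern is that readers see either the old file or the new one, never a half-written file. The cost is that the final rename depends on nothing else holding the target open.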

1.2 Kafka with ZooKeeper

1.2.1 Start the ZooKeeper service

%kafka_home%\bin\windows\zookeeper-server-start.bat ../../config/zookeeper.properties

1.2.2 Start the Kafka broker service

%kafka_home%\bin\windows\kafka-server-start.bat ../../config/server.properties

2 Windows cluster (on a single physical machine)

2.1 Kafka with KRaft

2.1.1 Make three copies of the Kafka installation

2.1.2 Edit each copy's configuration file

kafka_cluster_node1\config\kraft\server.properties

process.roles=broker,controller

node.id=1

controller.quorum.voters=1@localhost:9093,2@localhost:9093,3@localhost:9093

listeners=PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093

advertised.listeners=PLAINTEXT://localhost:9092

log.dirs=/kafka_cluster_node1/logs/tmp/kraft-combined-logs

kafka_cluster_node2\config\kraft\server.properties

process.roles=broker,controller

node.id=2

controller.quorum.voters=1@localhost:9093,2@localhost:9093,3@localhost:9093

listeners=PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093

advertised.listeners=PLAINTEXT://localhost:9092

log.dirs=/kafka_cluster_node2/logs/tmp/kraft-combined-logs

kafka_cluster_node3\config\kraft\server.properties

process.roles=broker,controller

node.id=3

controller.quorum.voters=1@localhost:9093,2@localhost:9093,3@localhost:9093

listeners=PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093

advertised.listeners=PLAINTEXT://localhost:9092

log.dirs=/kafka_cluster_node3/logs/tmp/kraft-combined-logs

2.1.3 Generate a Cluster UUID

Generate the cluster ID from any one of the copies.

kafka_cluster_node1\bin\windows\kafka-storage.bat random-uuid

2.1.4 Format Log Directories

Format the log directory of each node.

kafka_cluster_node1\bin\windows\kafka-storage.bat format -t IFxmE1eDTfSOX-U_ZV7ntw -c ../../config/kraft/server.properties

kafka_cluster_node2\bin\windows\kafka-storage.bat format -t IFxmE1eDTfSOX-U_ZV7ntw -c ../../config/kraft/server.properties

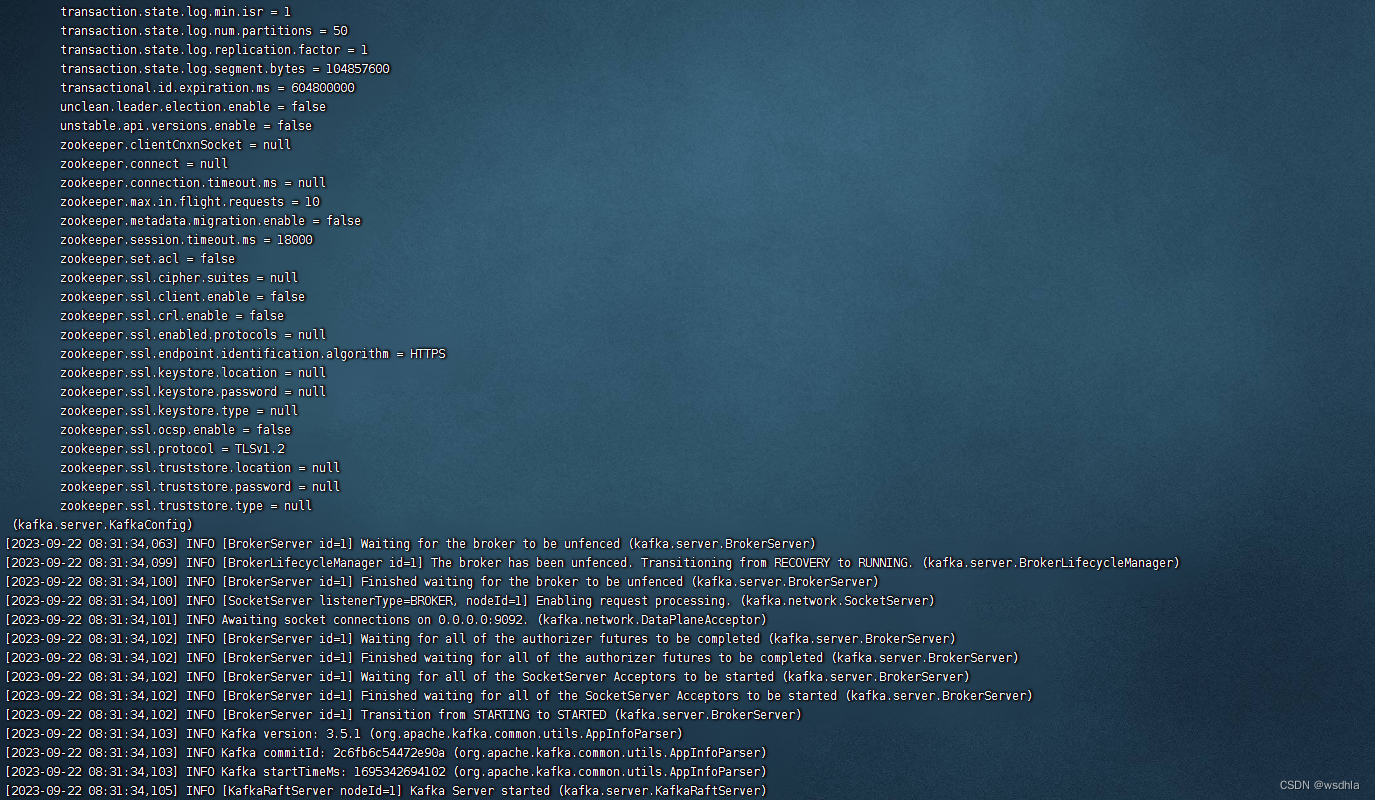

kafka_cluster_node3\bin\windows\kafka-storage.bat format -t IFxmE1eDTfSOX-U_ZV7ntw -c ../../config/kraft/server.properties

2.1.5 Start the Kafka Server

Start each node.

kafka_cluster_node1\bin\windows\kafka-server-start.bat ../../config/kraft/server.properties

kafka_cluster_node2\bin\windows\kafka-server-start.bat ../../config/kraft/server.properties

kafka_cluster_node3\bin\windows\kafka-server-start.bat ../../config/kraft/server.properties

It still fails!

Does KRaft actually support Windows? Or is this a problem with this Kafka version?

Giving up!
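One thing worth checking in the three configs from 2.1.2, separately from the Windows error in 1.1.3: all three nodes bind localhost:9092 and localhost:9093, and controller.quorum.voters lists the same address for all three ids. On one machine only one process can bind a given port, so nodes 2 and 3 could not start with these values regardless of the operating system. The Python sketch below flags such collisions. The helper names are made up, and it assumes every node runs on the same host.

```python
from collections import defaultdict

# listeners values as recorded in 2.1.2
NODE_LISTENERS = {
    "node1": "PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093",
    "node2": "PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093",
    "node3": "PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093",
}


def listener_ports(listeners: str) -> list:
    """Extract the port of every entry in a listeners value."""
    ports = []
    for entry in listeners.split(","):
        host_port = entry.split("://", 1)[1]
        ports.append(int(host_port.rsplit(":", 1)[1]))
    return ports


def find_port_conflicts(nodes: dict) -> dict:
    """Map each port used by more than one node to the nodes using it."""
    users = defaultdict(list)
    for node, listeners in nodes.items():
        for port in listener_ports(listeners):
            users[port].append(node)
    return {port: names for port, names in users.items() if len(names) > 1}


if __name__ == "__main__":
    for port, names in find_port_conflicts(NODE_LISTENERS).items():
        print(port, "is claimed by", ", ".join(names))
```

Giving each node its own pair of ports, and listing those controller ports in controller.quorum.voters, would clear this check. Whether that alone makes KRaft start on Windows is not something these notes confirm.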

2.2 Kafka with ZooKeeper

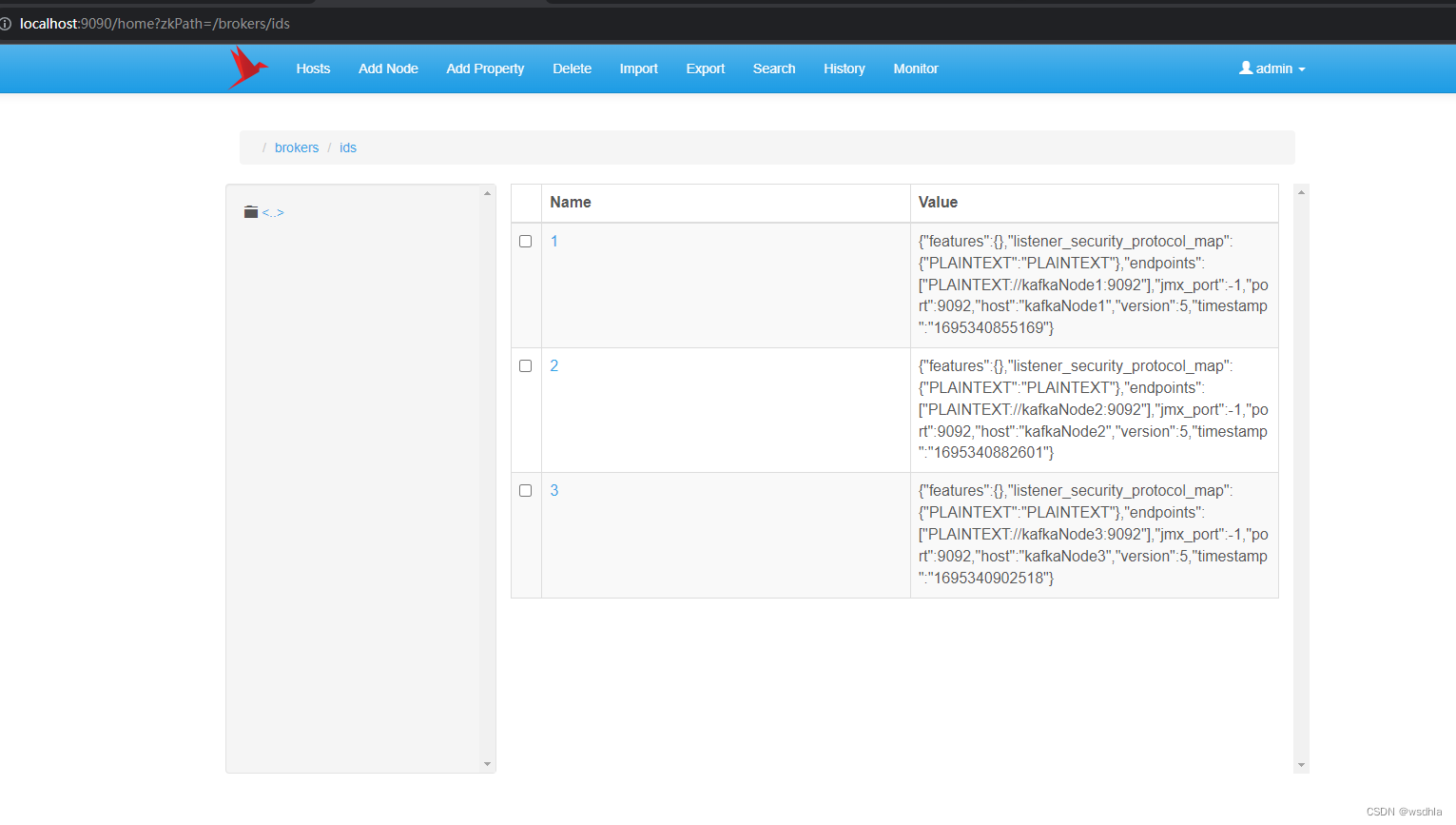

This needs 1 ZooKeeper node and 3 Kafka nodes.

2.2.1 Three copies of the Kafka installation are needed again

2.2.2 Edit each copy's configuration file

kafka_cluster_node1\config\server.properties

broker.id=1

zookeeper.connect=localhost:2181

listeners=PLAINTEXT://:9092

advertised.listeners=PLAINTEXT://kafkaNode1:9092

log.dirs=/kafka_cluster_node1/logs/tmp/kafka-logs

kafka_cluster_node2\config\server.properties

broker.id=2

zookeeper.connect=localhost:2181

listeners=PLAINTEXT://:9093

advertised.listeners=PLAINTEXT://kafkaNode2:9092

log.dirs=/kafka_cluster_node2/logs/tmp/kafka-logs

kafka_cluster_node3\config\server.properties

broker.id=3

zookeeper.connect=localhost:2181

listeners=PLAINTEXT://:9094

advertised.listeners=PLAINTEXT://kafkaNode3:9092

log.dirs=/kafka_cluster_node3/logs/tmp/kafka-logs

2.2.3 Start the ZooKeeper service

You can start a separately deployed ZooKeeper or use the one bundled with Kafka.

%kafka_home%\bin\windows\zookeeper-server-start.bat ../../config/zookeeper.properties

2.2.4 Start the Kafka broker service

Start each Kafka node.

kafka_cluster_node1\bin\windows\kafka-server-start.bat ../../config/server.properties

kafka_cluster_node2\bin\windows\kafka-server-start.bat ../../config/server.properties

kafka_cluster_node3\bin\windows\kafka-server-start.bat ../../config/server.properties

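The cluster deployed successfully!

However, the application still has problems sending messages; leaving a hook here to come back to.

3 Linux, single node

3.1 Kafka with KRaft

The steps are the same as on Windows, and it worked on the first try with no problems. (As expected!)

3.2 Kafka with ZooKeeper

Not yet verified.

4 Windows cluster (3 virtual machines)

4.1 Kafka with KRaft

Common exceptions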

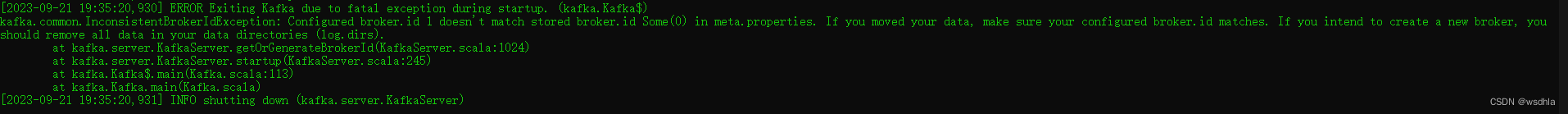

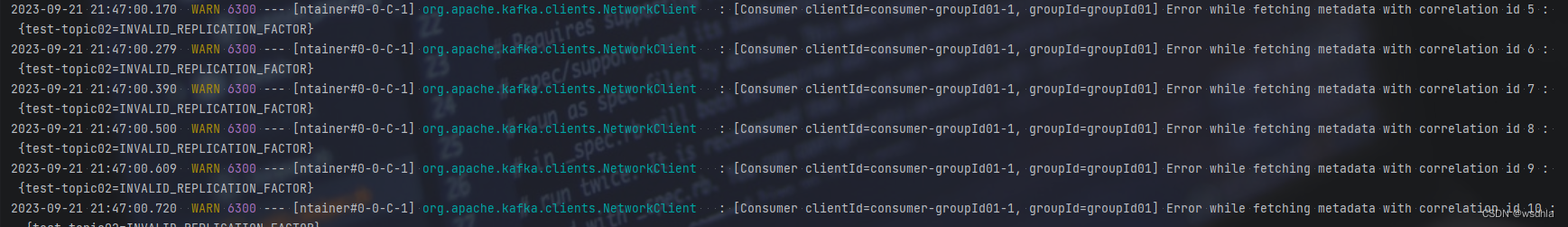

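Exception 1:

This happens because the broker.id in the tmp\kafka-logs\meta.properties file, written when the node was started in single-node mode, does not match the broker.id of the nodes in the current cluster. (Had each node been given its own log directory from the start, this problem would not have occurred.)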

#Thu Sep 21 16:56:50 CST 2023

cluster.id=bkuCLgUiRYe5CSnptF6ZVQ

version=0

broker.id=0

You can either change it to match or simply delete the file.
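The mismatch can be spotted before startup by comparing the two files. The Python sketch below uses the meta.properties content shown above and hypothetical helper names. It only compares the broker.id keys and makes no claim about what else Kafka checks in this file.

```python
def parse_properties(text: str) -> dict:
    """Parse key=value lines, skipping blanks and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def broker_id_matches(meta_text: str, server_text: str) -> bool:
    """True if meta.properties and server.properties agree on broker.id."""
    meta_id = parse_properties(meta_text).get("broker.id")
    server_id = parse_properties(server_text).get("broker.id")
    return meta_id == server_id


META = """#Thu Sep 21 16:56:50 CST 2023
cluster.id=bkuCLgUiRYe5CSnptF6ZVQ
version=0
broker.id=0
"""

if __name__ == "__main__":
    # server.properties of node 1 in the cluster sets broker.id=1
    print(broker_id_matches(META, "broker.id=1"))
```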

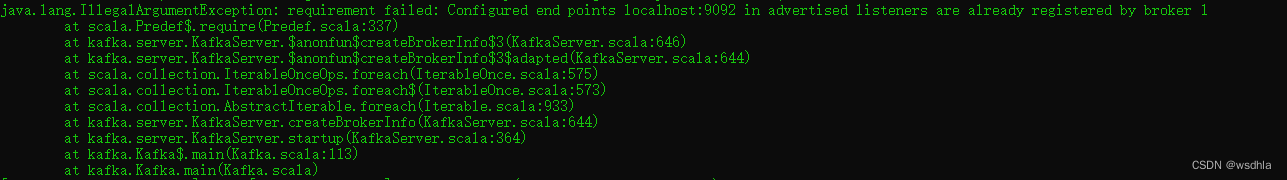

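Exception 2:

Because the cluster runs on a single machine, each node's advertised.listeners must be distinct. This can be done by editing etc/hosts:

localhost kafkaNode1

localhost kafkaNode2

localhost kafkaNode3

Exception 3:

Again because the cluster runs on a single host, the listeners ports must differ. Setting them to 9092, 9093, and 9094 respectively resolves this.

Exception 4:

When mapping host names in the hosts file, use 127.0.0.1, not localhost.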
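The reason is the hosts file format: each entry starts with an IP address, followed by one or more host names. The Python sketch below flags entries whose first field is not an IP address. The function name is made up, and the check is only about syntax; it does not consult the operating system's resolver.

```python
import ipaddress


def invalid_hosts_lines(text: str) -> list:
    """Return the hosts-file lines that do not start with an IP address
    followed by at least one host name."""
    bad = []
    for line in text.splitlines():
        entry = line.split("#", 1)[0].strip()  # drop trailing comments
        if not entry:
            continue
        fields = entry.split()
        try:
            ipaddress.ip_address(fields[0])
        except ValueError:
            bad.append(line)
            continue
        if len(fields) < 2:
            bad.append(line)
    return bad


if __name__ == "__main__":
    sample = "localhost kafkaNode1\n127.0.0.1 kafkaNode2\n127.0.0.1 kafkaNode3"
    print(invalid_hosts_lines(sample))
```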

Exception 5:

This is an application-side exception. It means spring.kafka.bootstrap-servers is misconfigured; it should be set to the advertised.listeners addresses.
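As a small illustration, the value for spring.kafka.bootstrap-servers can be derived from the brokers' advertised.listeners by dropping the listener name prefix. The Python sketch below uses the values recorded in 2.2.2. The function name is made up for this example.

```python
def bootstrap_servers(advertised_values: list) -> str:
    """Build a comma-separated host:port list from advertised.listeners values."""
    hosts = []
    for value in advertised_values:
        for entry in value.split(","):
            # "PLAINTEXT://kafkaNode1:9092" -> "kafkaNode1:9092"
            hosts.append(entry.split("://", 1)[1])
    return ",".join(hosts)


if __name__ == "__main__":
    print(bootstrap_servers([
        "PLAINTEXT://kafkaNode1:9092",
        "PLAINTEXT://kafkaNode2:9092",
        "PLAINTEXT://kafkaNode3:9092",
    ]))
```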

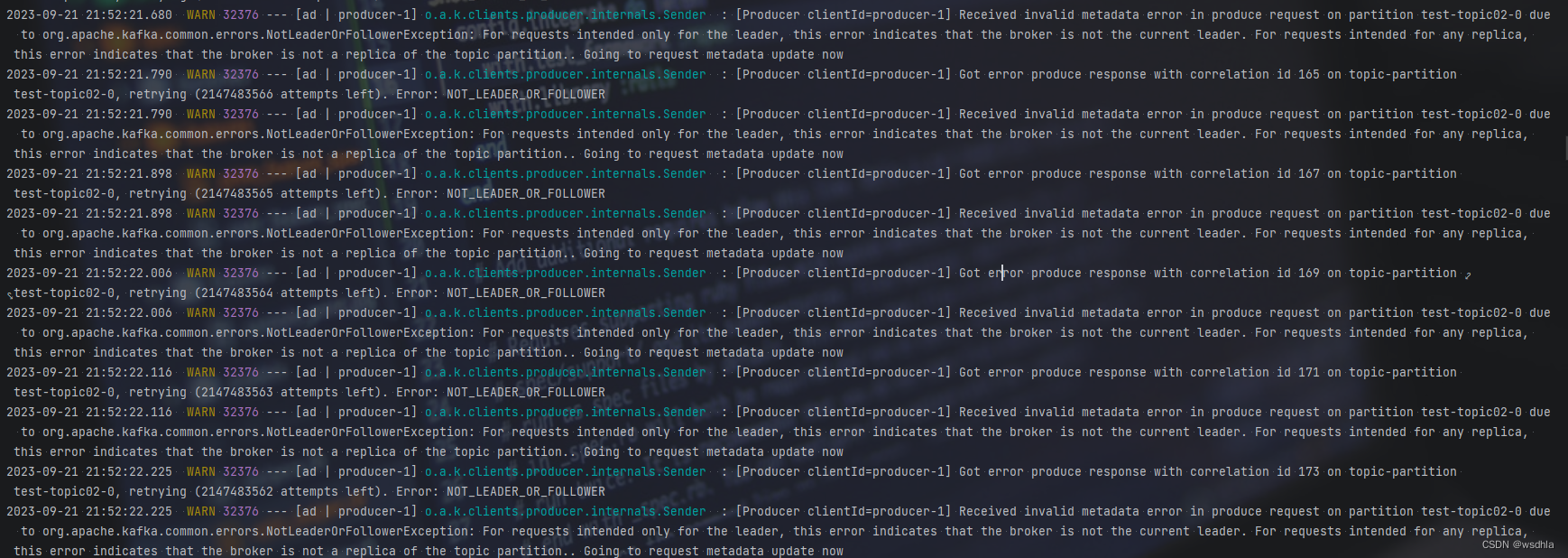

Exception 6:

The application gets Error: NOT_LEADER_OR_FOLLOWER when sending messages.

Still unresolved.
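I have not confirmed the cause, but one candidate worth ruling out is visible in 2.2.2: brokers 2 and 3 listen on ports 9093 and 9094 while advertising port 9092. If all three host names resolve to the same machine, a client that follows the advertised address of broker 2 or 3 would reach broker 1 instead, which could plausibly produce this error. The Python sketch below flags brokers whose advertised port differs from the port they bind. The names are made up, and it assumes there is no port forwarding between clients and brokers.

```python
# Values as recorded in 2.2.2
BROKERS = {
    1: {"listeners": "PLAINTEXT://:9092",
        "advertised.listeners": "PLAINTEXT://kafkaNode1:9092"},
    2: {"listeners": "PLAINTEXT://:9093",
        "advertised.listeners": "PLAINTEXT://kafkaNode2:9092"},
    3: {"listeners": "PLAINTEXT://:9094",
        "advertised.listeners": "PLAINTEXT://kafkaNode3:9092"},
}


def port_by_listener(value: str) -> dict:
    """Map listener name to port, e.g. {'PLAINTEXT': 9092}."""
    result = {}
    for entry in value.split(","):
        name, host_port = entry.split("://", 1)
        result[name] = int(host_port.rsplit(":", 1)[1])
    return result


def mismatched_brokers(brokers: dict) -> list:
    """Return ids of brokers advertising a port they do not listen on."""
    bad = []
    for broker_id, cfg in brokers.items():
        bound = port_by_listener(cfg["listeners"])
        advertised = port_by_listener(cfg["advertised.listeners"])
        if any(bound.get(name) != port for name, port in advertised.items()):
            bad.append(broker_id)
    return bad


if __name__ == "__main__":
    print(mismatched_brokers(BROKERS))
```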

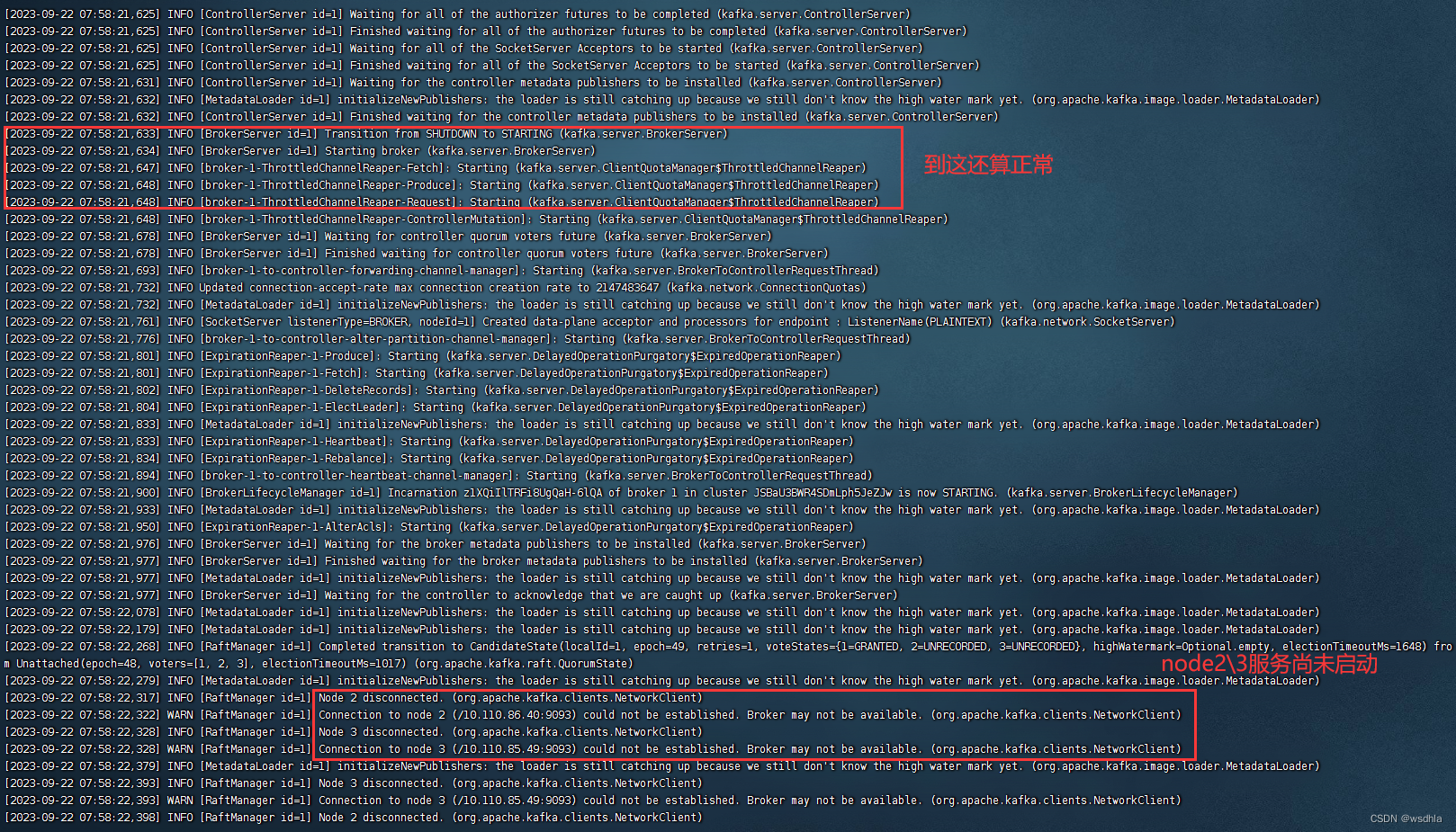

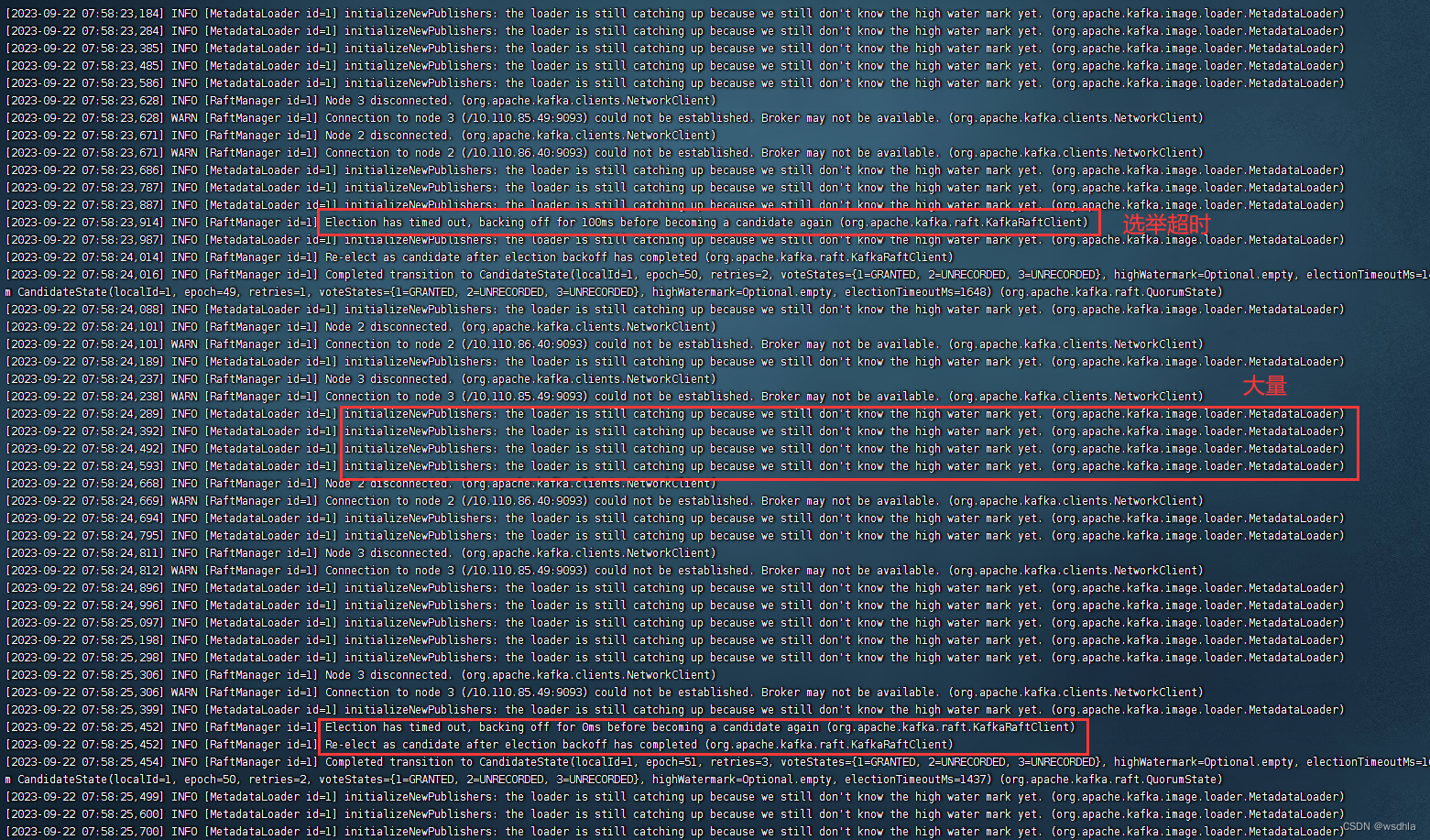

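Exception 7:

Linux cluster environment.

The cause was the firewall: port 9093 was unreachable between the three nodes. Adding iptables rules solved the problem.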
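A quick way to tell a blocked port from a Kafka problem is to try a plain TCP connection from each node to the others. The Python sketch below does that. The function name is made up, and the host names you pass in are whatever your nodes are called.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, running port_is_open("node2", 9093) on node 1 (with "node2" standing in for the real host name) returning False while the broker process is up points at the network or firewall rather than at Kafka.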

Error Codes

Official documentation: Apache Kafka

We use numeric codes to indicate what problem occurred on the server. These can be translated by the client into exceptions or whatever the appropriate error handling mechanism in the client language. Here is a table of the error codes currently in use:

| ERROR | CODE | RETRIABLE | DESCRIPTION |

|---|---|---|---|

| UNKNOWN_SERVER_ERROR | -1 | False | The server experienced an unexpected error when processing the request. |

| NONE | 0 | False | |

| OFFSET_OUT_OF_RANGE | 1 | False | The requested offset is not within the range of offsets maintained by the server. |

| CORRUPT_MESSAGE | 2 | True | This message has failed its CRC checksum, exceeds the valid size, has a null key for a compacted topic, or is otherwise corrupt. |

| UNKNOWN_TOPIC_OR_PARTITION | 3 | True | This server does not host this topic-partition. |

| INVALID_FETCH_SIZE | 4 | False | The requested fetch size is invalid. |

| LEADER_NOT_AVAILABLE | 5 | True | There is no leader for this topic-partition as we are in the middle of a leadership election. |

| NOT_LEADER_OR_FOLLOWER | 6 | True | For requests intended only for the leader, this error indicates that the broker is not the current leader. For requests intended for any replica, this error indicates that the broker is not a replica of the topic partition. |

| REQUEST_TIMED_OUT | 7 | True | The request timed out. |

| BROKER_NOT_AVAILABLE | 8 | False | The broker is not available. |

| REPLICA_NOT_AVAILABLE | 9 | True | The replica is not available for the requested topic-partition. Produce/Fetch requests and other requests intended only for the leader or follower return NOT_LEADER_OR_FOLLOWER if the broker is not a replica of the topic-partition. |

| MESSAGE_TOO_LARGE | 10 | False | The request included a message larger than the max message size the server will accept. |

| STALE_CONTROLLER_EPOCH | 11 | False | The controller moved to another broker. |

| OFFSET_METADATA_TOO_LARGE | 12 | False | The metadata field of the offset request was too large. |

| NETWORK_EXCEPTION | 13 | True | The server disconnected before a response was received. |

| COORDINATOR_LOAD_IN_PROGRESS | 14 | True | The coordinator is loading and hence can't process requests. |

| COORDINATOR_NOT_AVAILABLE | 15 | True | The coordinator is not available. |

| NOT_COORDINATOR | 16 | True | This is not the correct coordinator. |

| INVALID_TOPIC_EXCEPTION | 17 | False | The request attempted to perform an operation on an invalid topic. |

| RECORD_LIST_TOO_LARGE | 18 | False | The request included message batch larger than the configured segment size on the server. |

| NOT_ENOUGH_REPLICAS | 19 | True | Messages are rejected since there are fewer in-sync replicas than required. |

| NOT_ENOUGH_REPLICAS_AFTER_APPEND | 20 | True | Messages are written to the log, but to fewer in-sync replicas than required. |

| INVALID_REQUIRED_ACKS | 21 | False | Produce request specified an invalid value for required acks. |

| ILLEGAL_GENERATION | 22 | False | Specified group generation id is not valid. |

| INCONSISTENT_GROUP_PROTOCOL | 23 | False | The group member's supported protocols are incompatible with those of existing members or first group member tried to join with empty protocol type or empty protocol list. |

| INVALID_GROUP_ID | 24 | False | The configured groupId is invalid. |

| UNKNOWN_MEMBER_ID | 25 | False | The coordinator is not aware of this member. |

| INVALID_SESSION_TIMEOUT | 26 | False | The session timeout is not within the range allowed by the broker (as configured by group.min.session.timeout.ms and group.max.session.timeout.ms). |

| REBALANCE_IN_PROGRESS | 27 | False | The group is rebalancing, so a rejoin is needed. |

| INVALID_COMMIT_OFFSET_SIZE | 28 | False | The committing offset data size is not valid. |

| TOPIC_AUTHORIZATION_FAILED | 29 | False | Topic authorization failed. |

| GROUP_AUTHORIZATION_FAILED | 30 | False | Group authorization failed. |

| CLUSTER_AUTHORIZATION_FAILED | 31 | False | Cluster authorization failed. |

| INVALID_TIMESTAMP | 32 | False | The timestamp of the message is out of acceptable range. |

| UNSUPPORTED_SASL_MECHANISM | 33 | False | The broker does not support the requested SASL mechanism. |

| ILLEGAL_SASL_STATE | 34 | False | Request is not valid given the current SASL state. |

| UNSUPPORTED_VERSION | 35 | False | The version of API is not supported. |

| TOPIC_ALREADY_EXISTS | 36 | False | Topic with this name already exists. |

| INVALID_PARTITIONS | 37 | False | Number of partitions is below 1. |

| INVALID_REPLICATION_FACTOR | 38 | False | Replication factor is below 1 or larger than the number of available brokers. |

| INVALID_REPLICA_ASSIGNMENT | 39 | False | Replica assignment is invalid. |

| INVALID_CONFIG | 40 | False | Configuration is invalid. |

| NOT_CONTROLLER | 41 | True | This is not the correct controller for this cluster. |

| INVALID_REQUEST | 42 | False | This most likely occurs because of a request being malformed by the client library or the message was sent to an incompatible broker. See the broker logs for more details. |

| UNSUPPORTED_FOR_MESSAGE_FORMAT | 43 | False | The message format version on the broker does not support the request. |

| POLICY_VIOLATION | 44 | False | Request parameters do not satisfy the configured policy. |

| OUT_OF_ORDER_SEQUENCE_NUMBER | 45 | False | The broker received an out of order sequence number. |

| DUPLICATE_SEQUENCE_NUMBER | 46 | False | The broker received a duplicate sequence number. |

| INVALID_PRODUCER_EPOCH | 47 | False | Producer attempted to produce with an old epoch. |

| INVALID_TXN_STATE | 48 | False | The producer attempted a transactional operation in an invalid state. |

| INVALID_PRODUCER_ID_MAPPING | 49 | False | The producer attempted to use a producer id which is not currently assigned to its transactional id. |

| INVALID_TRANSACTION_TIMEOUT | 50 | False | The transaction timeout is larger than the maximum value allowed by the broker (as configured by transaction.max.timeout.ms). |

| CONCURRENT_TRANSACTIONS | 51 | True | The producer attempted to update a transaction while another concurrent operation on the same transaction was ongoing. |

| TRANSACTION_COORDINATOR_FENCED | 52 | False | Indicates that the transaction coordinator sending a WriteTxnMarker is no longer the current coordinator for a given producer. |

| TRANSACTIONAL_ID_AUTHORIZATION_FAILED | 53 | False | Transactional Id authorization failed. |

| SECURITY_DISABLED | 54 | False | Security features are disabled. |

| OPERATION_NOT_ATTEMPTED | 55 | False | The broker did not attempt to execute this operation. This may happen for batched RPCs where some operations in the batch failed, causing the broker to respond without trying the rest. |

| KAFKA_STORAGE_ERROR | 56 | True | Disk error when trying to access log file on the disk. |

| LOG_DIR_NOT_FOUND | 57 | False | The user-specified log directory is not found in the broker config. |

| SASL_AUTHENTICATION_FAILED | 58 | False | SASL Authentication failed. |

| UNKNOWN_PRODUCER_ID | 59 | False | This exception is raised by the broker if it could not locate the producer metadata associated with the producerId in question. This could happen if, for instance, the producer's records were deleted because their retention time had elapsed. Once the last records of the producerId are removed, the producer's metadata is removed from the broker, and future appends by the producer will return this exception. |

| REASSIGNMENT_IN_PROGRESS | 60 | False | A partition reassignment is in progress. |

| DELEGATION_TOKEN_AUTH_DISABLED | 61 | False | Delegation Token feature is not enabled. |

| DELEGATION_TOKEN_NOT_FOUND | 62 | False | Delegation Token is not found on server. |

| DELEGATION_TOKEN_OWNER_MISMATCH | 63 | False | Specified Principal is not valid Owner/Renewer. |

| DELEGATION_TOKEN_REQUEST_NOT_ALLOWED | 64 | False | Delegation Token requests are not allowed on PLAINTEXT/1-way SSL channels and on delegation token authenticated channels. |

| DELEGATION_TOKEN_AUTHORIZATION_FAILED | 65 | False | Delegation Token authorization failed. |

| DELEGATION_TOKEN_EXPIRED | 66 | False | Delegation Token is expired. |

| INVALID_PRINCIPAL_TYPE | 67 | False | Supplied principalType is not supported. |

| NON_EMPTY_GROUP | 68 | False | The group is not empty. |

| GROUP_ID_NOT_FOUND | 69 | False | The group id does not exist. |

| FETCH_SESSION_ID_NOT_FOUND | 70 | True | The fetch session ID was not found. |

| INVALID_FETCH_SESSION_EPOCH | 71 | True | The fetch session epoch is invalid. |

| LISTENER_NOT_FOUND | 72 | True | There is no listener on the leader broker that matches the listener on which metadata request was processed. |

| TOPIC_DELETION_DISABLED | 73 | False | Topic deletion is disabled. |

| FENCED_LEADER_EPOCH | 74 | True | The leader epoch in the request is older than the epoch on the broker. |

| UNKNOWN_LEADER_EPOCH | 75 | True | The leader epoch in the request is newer than the epoch on the broker. |

| UNSUPPORTED_COMPRESSION_TYPE | 76 | False | The requesting client does not support the compression type of given partition. |

| STALE_BROKER_EPOCH | 77 | False | Broker epoch has changed. |

| OFFSET_NOT_AVAILABLE | 78 | True | The leader high watermark has not caught up from a recent leader election so the offsets cannot be guaranteed to be monotonically increasing. |

| MEMBER_ID_REQUIRED | 79 | False | The group member needs to have a valid member id before actually entering a consumer group. |

| PREFERRED_LEADER_NOT_AVAILABLE | 80 | True | The preferred leader was not available. |

| GROUP_MAX_SIZE_REACHED | 81 | False | The consumer group has reached its max size. |

| FENCED_INSTANCE_ID | 82 | False | The broker rejected this static consumer since another consumer with the same group.instance.id has registered with a different member.id. |

| ELIGIBLE_LEADERS_NOT_AVAILABLE | 83 | True | Eligible topic partition leaders are not available. |

| ELECTION_NOT_NEEDED | 84 | True | Leader election not needed for topic partition. |

| NO_REASSIGNMENT_IN_PROGRESS | 85 | False | No partition reassignment is in progress. |

| GROUP_SUBSCRIBED_TO_TOPIC | 86 | False | Deleting offsets of a topic is forbidden while the consumer group is actively subscribed to it. |

| INVALID_RECORD | 87 | False | This record has failed the validation on broker and hence will be rejected. |

| UNSTABLE_OFFSET_COMMIT | 88 | True | There are unstable offsets that need to be cleared. |

| THROTTLING_QUOTA_EXCEEDED | 89 | True | The throttling quota has been exceeded. |

| PRODUCER_FENCED | 90 | False | There is a newer producer with the same transactionalId which fences the current one. |

| RESOURCE_NOT_FOUND | 91 | False | A request illegally referred to a resource that does not exist. |

| DUPLICATE_RESOURCE | 92 | False | A request illegally referred to the same resource twice. |

| UNACCEPTABLE_CREDENTIAL | 93 | False | Requested credential would not meet criteria for acceptability. |

| INCONSISTENT_VOTER_SET | 94 | False | Indicates that either the sender or recipient of a voter-only request is not one of the expected voters. |

| INVALID_UPDATE_VERSION | 95 | False | The given update version was invalid. |

| FEATURE_UPDATE_FAILED | 96 | False | Unable to update finalized features due to an unexpected server error. |

| PRINCIPAL_DESERIALIZATION_FAILURE | 97 | False | Request principal deserialization failed during forwarding. This indicates an internal error on the broker cluster security setup. |

| SNAPSHOT_NOT_FOUND | 98 | False | Requested snapshot was not found |

| POSITION_OUT_OF_RANGE | 99 | False | Requested position is not greater than or equal to zero, and less than the size of the snapshot. |

| UNKNOWN_TOPIC_ID | 100 | True | This server does not host this topic ID. |

| DUPLICATE_BROKER_REGISTRATION | 101 | False | This broker ID is already in use. |

| BROKER_ID_NOT_REGISTERED | 102 | False | The given broker ID was not registered. |

| INCONSISTENT_TOPIC_ID | 103 | True | The log's topic ID did not match the topic ID in the request |

| INCONSISTENT_CLUSTER_ID | 104 | False | The clusterId in the request does not match that found on the server |

| TRANSACTIONAL_ID_NOT_FOUND | 105 | False | The transactionalId could not be found |

| FETCH_SESSION_TOPIC_ID_ERROR | 106 | True | The fetch session encountered inconsistent topic ID usage |

| INELIGIBLE_REPLICA | 107 | False | The new ISR contains at least one ineligible replica. |

| NEW_LEADER_ELECTED | 108 | False | The AlterPartition request successfully updated the partition state but the leader has changed. |

| OFFSET_MOVED_TO_TIERED_STORAGE | 109 | False | The requested offset is moved to tiered storage. |

| FENCED_MEMBER_EPOCH | 110 | False | The member epoch is fenced by the group coordinator. The member must abandon all its partitions and rejoin. |

| UNRELEASED_INSTANCE_ID | 111 | False | The instance ID is still used by another member in the consumer group. That member must leave first. |

| UNSUPPORTED_ASSIGNOR | 112 | False | The assignor or its version range is not supported by the consumer group. |
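On the client side, the table can be used as a lookup from numeric code to name and retry decision. The Python sketch below copies a handful of rows from it. The KafkaError type and the describe function are made up for this example and are not part of any Kafka client library.

```python
from typing import NamedTuple


class KafkaError(NamedTuple):
    name: str
    retriable: bool


# A few rows copied from the table above; extend as needed.
ERRORS = {
    -1: KafkaError("UNKNOWN_SERVER_ERROR", False),
    3: KafkaError("UNKNOWN_TOPIC_OR_PARTITION", True),
    5: KafkaError("LEADER_NOT_AVAILABLE", True),
    6: KafkaError("NOT_LEADER_OR_FOLLOWER", True),
    7: KafkaError("REQUEST_TIMED_OUT", True),
    10: KafkaError("MESSAGE_TOO_LARGE", False),
}


def describe(code: int) -> str:
    """Return the error name and whether the table marks it retriable."""
    err = ERRORS.get(code)
    if err is None:
        return "unknown error code " + str(code)
    action = "retry" if err.retriable else "do not retry"
    return err.name + " (" + action + ")"


if __name__ == "__main__":
    print(describe(6))
```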